Wow, 3½ weeks have passed since I last posted. But I have not been idle: during this time I revamped and re-sorted the old tests, created 5 new ones, and played quite a few test games together with Eric, who helped me a lot with his bug testing and game playing. This is going to be a long post, so be prepared to be bored to tears. Before sharing these new test games, which I have renamed Renaissance School because that was the period in which these games were played, I want to explain why I am creating these tests. As many of you here in the Forum know, I have a pretty sizeable collection of dedicated chess computers and chess programs.

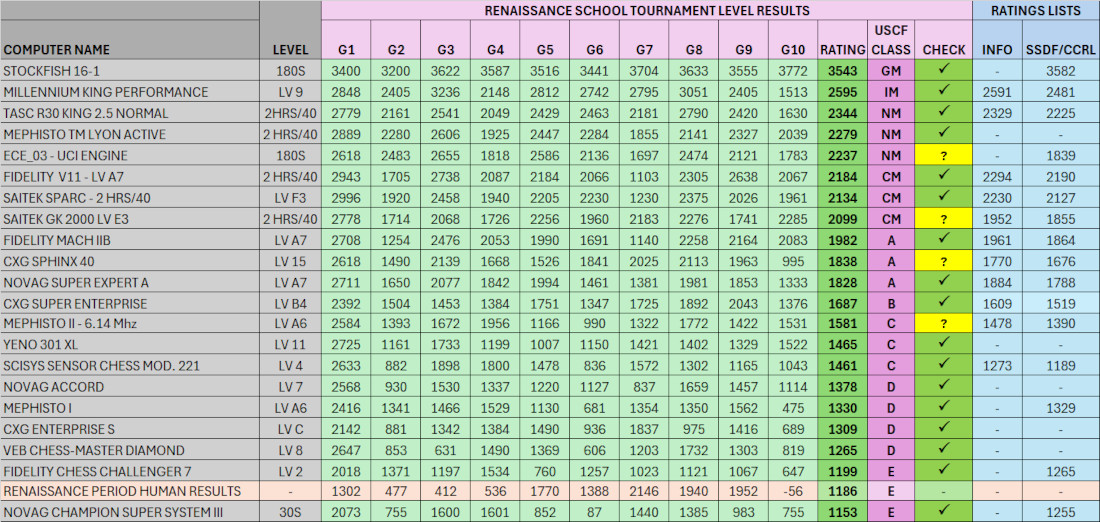

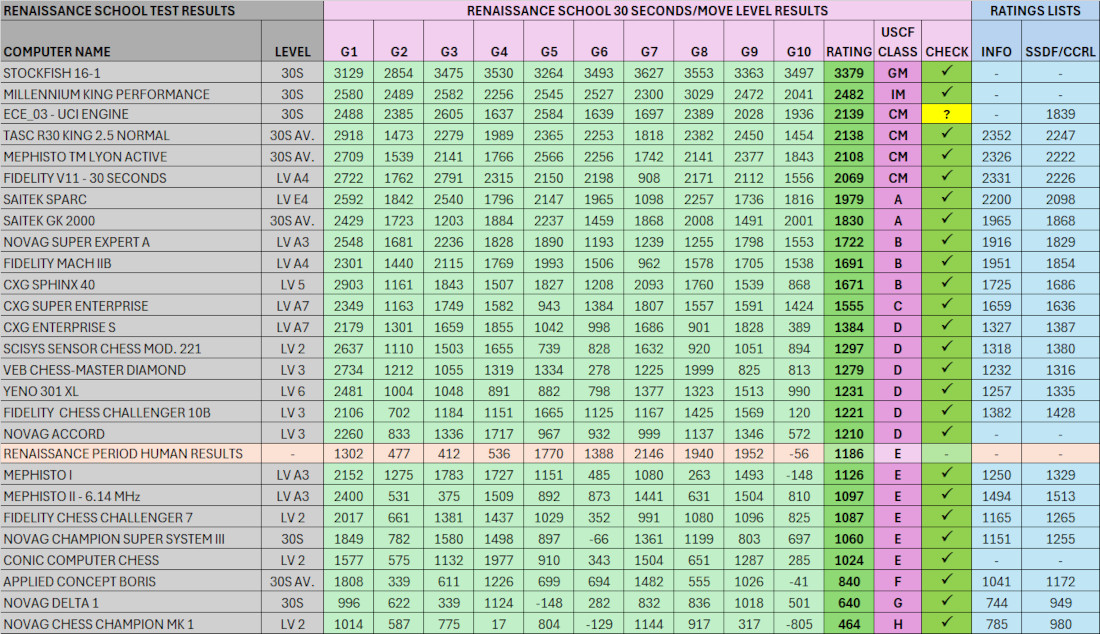

This test comprises 10 games totaling 415 moves: 208 White moves and 207 Black moves. What makes it so interesting for me is that Eric and I ran 16 chess computer programs at Tournament level through these 10 test games, all facing the same 415 positions. We also ran the same test at 30 seconds per move, and Eric is currently working on adding another 6 computers.

If you were to do the math, having these 16 computers play each other 10 times would take 16 × 15 ÷ 2 = 120 pairings × 10 = 1,200 games at Tournament level and another 1,200 at the Active 30 s/move level, compared with just 160 Tournament and 160 Active test games. And even then you still could not compare which computer would play which move in any given position without going back through the games, setting up those positions on the computers again, and seeing who would have played what.

Now just imagine you have, for example, a collection of 500 chess computers and you want to play them all against each other 10 times to see for yourself how they rank. At 3 minutes per move it would be impossible: the tournament would need 500 × 499 ÷ 2 = 124,750 pairings × 10 = 1,247,500 games. At an estimated 3 hours per game at the very minimum, that is at least 3,742,500 hours. A year averages 8,766 hours including leap years, so one person would have to play 24/7 for about 427 years and never sleep or do anything else.
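The time estimate can be checked the same way. This sketch assumes 10 games per pairing with each game counted once, a minimum of 3 hours per game, and an average year of 365.25 × 24 = 8,766 hours of nonstop play; the function name is hypothetical.

```python
HOURS_PER_YEAR = 365.25 * 24  # 8,766 hours, averaging in leap years


def round_robin_years(computers: int, games_per_pairing: int, hours_per_game: int):
    """Return (games, hours, years) for a full round robin played nonstop."""
    games = computers * (computers - 1) // 2 * games_per_pairing
    hours = games * hours_per_game
    return games, hours, hours / HOURS_PER_YEAR


games, hours, years = round_robin_years(500, 10, 3)
print(f"{games:,} games, {hours:,} hours, about {years:.0f} years nonstop")
```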

Take Schachcomputer.info as an example: they just updated their Tournament list and added 5,220 games since their previous update (I don't know when that was). In total there are now just over 18,000 games, collected over approximately 25 years, with 189 computers on the list, never mind 500. That represents a massive amount of effort and dedication from the players. I don't know how many people play and send in their games, but it’s a huge amount of work and commitment, and kudos to those players.

Since I am a collector of chess computers, you can perhaps now see why I am interested in good methods of rating every one of my computers and favorite chess programs, be it DOS, PC, Commodore or tabletop, and comparing them in an exact apples-to-apples comparison. I want to be able to see the results in this lifetime.

So anyway, even if I must do this alone, now that I am retired (you can do the math) I can easily complete this, health permitting, without breaking into a sweat, especially if I cheat a little and play two or three computers at the same time. Since the test games are short compared with a full tournament match game, I can casually complete 2-3 computers a week, so in possibly less than 4 years I would have the data for 500 computers at both Tournament and Active level, plus any shorter level that might be of interest, e.g. 10 seconds per move. Instead of taking centuries.
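A quick sketch of that schedule, assuming one "computer" means running it through the full test suite at each level and counting whole weeks: at 2 to 3 computers a week, 500 computers take roughly 3.2 to 4.8 years, so "less than 4 years" holds at an average of about 2.5 computers a week or faster. The helper name is only illustrative.

```python
import math

WEEKS_PER_YEAR = 365.25 / 7  # about 52.18 weeks in an average year


def weeks_to_finish(computers: int, computers_per_week: float) -> int:
    """Whole weeks needed to run every computer through the test suite."""
    return math.ceil(computers / computers_per_week)


for rate in (2, 2.5, 3):
    weeks = weeks_to_finish(500, rate)
    print(f"{rate} computers/week: {weeks} weeks, about {weeks / WEEKS_PER_YEAR:.1f} years")
```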

However, just imagine how quick all this would be if several people played these tests with their computers and the results were recorded and shared with everyone. Those 4 years might quickly turn into less than one year, and everyone would be in data heaven for chess computer comparisons.

To summarize, this has been my driver: to create a workable, accurate test that can compare every chess program, as long as it has a move take-back option, and every human who might be interested in seeing how they rate.

Eric has been of great assistance with his games and spreadsheet testing, finding the human errors I made on the spreadsheets and suggesting improvements such as the “Sneak a Peek” feature I just added. So a big thank you to Eric.

I won’t bore you further with the many different things you can learn from the data of these tests, but do I think these tests rate accurately?

Well, as with regular computer games, the more test games you play, the more accurate the average final ratings become. But within this 10-game universe the ratings are 100% accurate; they cannot be otherwise, since every computer is rated in exactly the same way on exactly the same move-by-move game positions.

SF16-1 scored 3543 ELO, King Performance 2595 and Chess-Master Diamond 1265. It’s not made up; it’s how they played these games.

Now back to these tests.

Above you can see the games played so far by Eric and me at Tournament level. I have check-marked the results that match other rating lists pretty well and put question marks next to the ones that are a little high. The hard work was figuring out the scoring calculations and automating them so that the results match the ratings human chess players are used to comparing themselves with. The scores for individual games, based on the moves played, are all over the place, but combined across all 415 moves they produce a pretty good ELO comparison, and of course more test games would make it even more accurate. You can see from the individual test games how up and down each computer performs, since each was programmed differently by its creators. I mean, who would have thought that V11 plays like a beginner in Game 7 and like a Grandmaster in other games? Some games are simply more complex than others for chess computers.

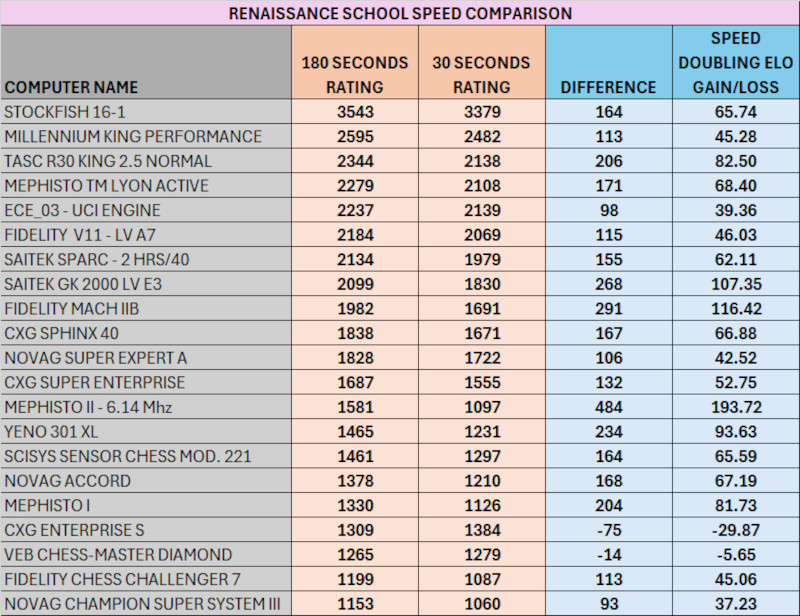

Playing the same test at 30 seconds per move, you can see how their skill level declines. Technically it is wrong to think a player is playing at, for example, 2200 ELO in Blitz when the quality of the games, for all to see, is at an 1800 level. With few exceptions, these tests show that difference. Some computers just get lucky and score better, but it's mostly down to timing. The baseline for these tests is Tournament level, and more test games will likely eliminate outlying high or low scores.

This test will very accurately show how the performance of most computers (excluding any lucky ones) improves or declines as time is added or reduced.

Lastly, you can see on the list that I searched for and found a weak chess program listed by CCRL at 1839 ELO. I ran it through these tests, and as expected it scored higher. In the past I can't count how many times I tried to play an engine against a dedicated computer, and the dedicated was embarrassed every time because of the engine's speed. So I tried different handicaps, such as slowing the engine down. This time I ran the test with ECE_03 at full laptop speed on 1 core, only reducing the hash to 1 MB (that may be too low, and experts may suggest a higher hash for testing). I then played it at full speed against TM Lyon, and TM Lyon won 2-0, just as its higher Test rating predicted. This is the first time I have ever, at the first attempt and without endless searching, playing and slowdowns, had both sides playing at their natural speed and seen the dedicated computer win. That opens up a lot of doors for some great future tournaments.

Here are the games:

Game 1: https://lichess.org/yvyS5bo4

Game 2: https://lichess.org/9LWIscv6

I am finally done with this lengthy post, and all that is left is to give you the link to download the zip file for the 10 test games, which includes the tests played so far.

https://www.spacious-mind.com/forum_rep ... School.zip

Best regards

Nick